The European Parliament has voted to adopt its negotiating position on the proposed Artificial Intelligence (AI) Act, with an overwhelming 499 votes in favor, 28 against, and 93 abstentions. This milestone now paves the way for forthcoming discussions with EU member states to finalize the legislation, which aims to ensure the widespread adoption of human-centric and trustworthy AI while safeguarding health, safety, fundamental rights, and democracy from potentially harmful effects.

Highlighting the significance of these developments, co-rapporteur Brando Benifei said, “We want AI’s positive potential for creativity and productivity to be harnessed, but we will also fight to protect our position and counter dangers to our democracies and freedoms during the negotiations with the Council.”

Ban on AI for biometric surveillance and predictive policing

One of the key aspects of the AI Act is its list of prohibited AI practices. Taking a risk-based approach, the rules set obligations for AI providers and for those deploying AI systems according to the level of risk a system can generate. AI systems that pose an unacceptable risk to people’s safety and well-being are prohibited outright.

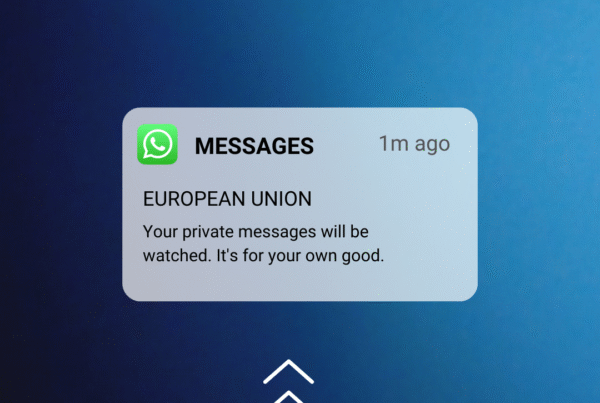

The European Parliament has expanded the list of prohibited practices to include intrusive and discriminatory uses of AI. These include “real-time” remote biometric identification systems in publicly accessible spaces, as well as “post” remote biometric identification systems, with the sole exception of law enforcement use for the prosecution of serious crimes and only after judicial authorization. The legislation also prohibits biometric categorization systems that use sensitive characteristics such as gender, race, ethnicity, citizenship status, religion, or political orientation.

MEPs have also addressed concerns surrounding predictive policing systems, which rely on profiling, location, or past criminal behavior. These systems have been added to the list of banned AI practices due to their potential for discrimination and violation of fundamental rights. Furthermore, emotion recognition systems used in law enforcement, border management, the workplace, and educational institutions are prohibited, as they raise concerns about privacy and the potential for misuse.

Classifying AI models

Another critical aspect of the AI Act is how it classifies high-risk AI applications. Under Parliament’s text, the high-risk category now covers AI systems that pose significant harm to people’s health, safety, fundamental rights, or the environment. It also extends to AI systems used to influence voters and the outcome of elections, and to recommender systems used by social media platforms with over 45 million users.

In parallel, the AI Act sets out obligations for providers of general-purpose AI, with particular attention to foundation models, a new and fast-evolving development in the field. Providers of these models will be required to assess and mitigate possible risks to health, safety, fundamental rights, the environment, democracy, and the rule of law, and to register their models in the EU database before releasing them on the European market.

Balancing innovation and human rights

To balance these safeguards with support for innovation, and for small and medium-sized enterprises (SMEs) in particular, MEPs added exemptions for research activities and for AI components provided under open-source licenses. The legislation also promotes regulatory sandboxes: real-life environments, established by public authorities, in which AI can be tested before it is deployed.

As negotiations proceed between the European Parliament and EU member states, the proposed AI Act holds the promise of establishing groundbreaking regulations to guide the safe and transparent deployment of AI, while upholding Europe’s core values and protecting its citizens’ interests.